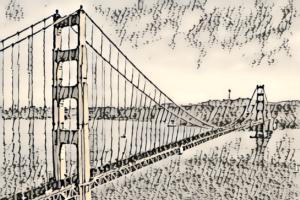

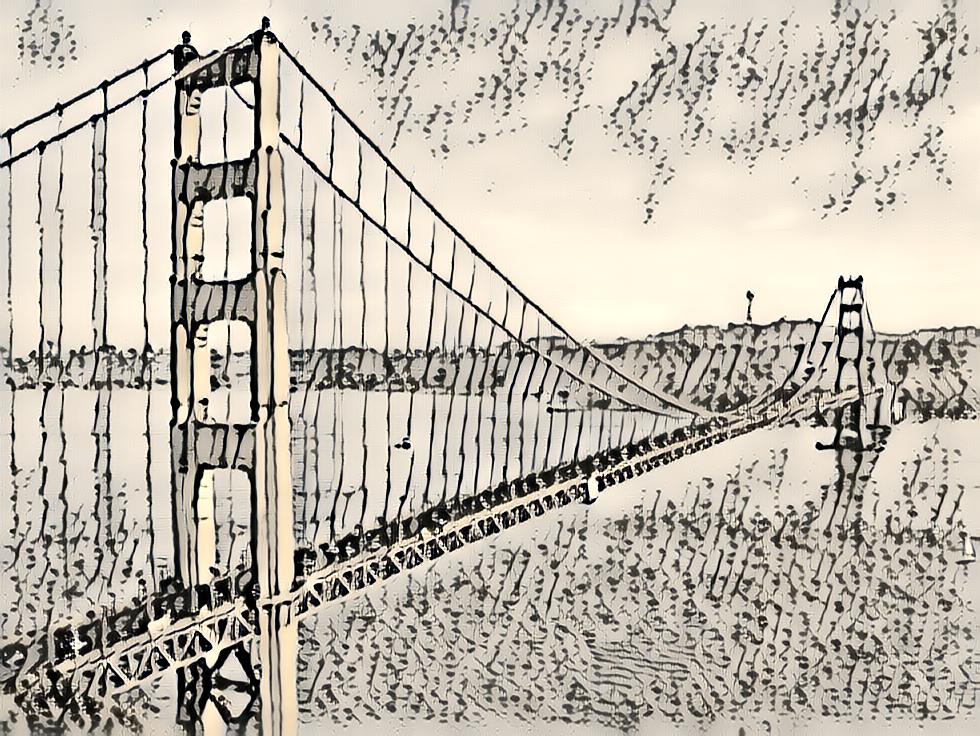

This page contains pre-trained models and examples from my implementation of style transfer algorithms. Everything here is based on the method described by Justin Johnson, Alexandre Alahi, and Fei-Fei Li in *Perceptual Losses for Real-Time Style Transfer and Super-Resolution*. My implementation has some modifications, which are detailed on the GitHub page. There you can also find instructions for training models and using them to style images.
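As a rough illustration of the loss from the paper (not of this implementation, whose modifications are described on GitHub), training combines a content loss on feature maps with a style loss on their Gram matrices, scaled by a content weight and a style weight. The sketch below uses plain Python lists for tiny feature maps. In the paper these features come from several layers of a pretrained VGG-16 network, and a total-variation regularizer is also used; both are left out here. All function and parameter names are hypothetical.

```python
from typing import List

# A feature map here is C channels, each flattened to H*W values.
FeatureMap = List[List[float]]


def gram_matrix(features: FeatureMap) -> List[List[float]]:
    """Inner products between every pair of channels, normalized by C*H*W."""
    c = len(features)
    n = len(features[0])  # H*W values per channel
    norm = c * n
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / norm for j in range(c)]
        for i in range(c)
    ]


def content_loss(generated: FeatureMap, content: FeatureMap) -> float:
    """Squared feature difference averaged over C*H*W (feature reconstruction loss)."""
    total = sum(
        (g - t) ** 2 for gc, tc in zip(generated, content) for g, t in zip(gc, tc)
    )
    return total / (len(generated) * len(generated[0]))


def style_loss(generated: FeatureMap, style: FeatureMap) -> float:
    """Squared Frobenius distance between the two Gram matrices."""
    g1, g2 = gram_matrix(generated), gram_matrix(style)
    return sum((a - b) ** 2 for r1, r2 in zip(g1, g2) for a, b in zip(r1, r2))


def total_loss(
    generated: FeatureMap,
    content: FeatureMap,
    style: FeatureMap,
    content_weight: float = 1.0,  # hypothetical placeholder default
    style_weight: float = 1.0,  # hypothetical placeholder default
) -> float:
    """Weighted sum of the content and style terms (TV regularizer omitted)."""
    return content_weight * content_loss(generated, content) + style_weight * style_loss(
        generated, style
    )
```

For example, `total_loss([[1.0, 3.0]], [[1.0, 1.0]], [[1.0, 1.0]], content_weight=10.0, style_weight=0.0001)` evaluates to 10 × 2.0 + 0.0001 × 16.0, about 20.0016. This shows where the content weight and style weight overrides noted for individual models below enter the objective.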

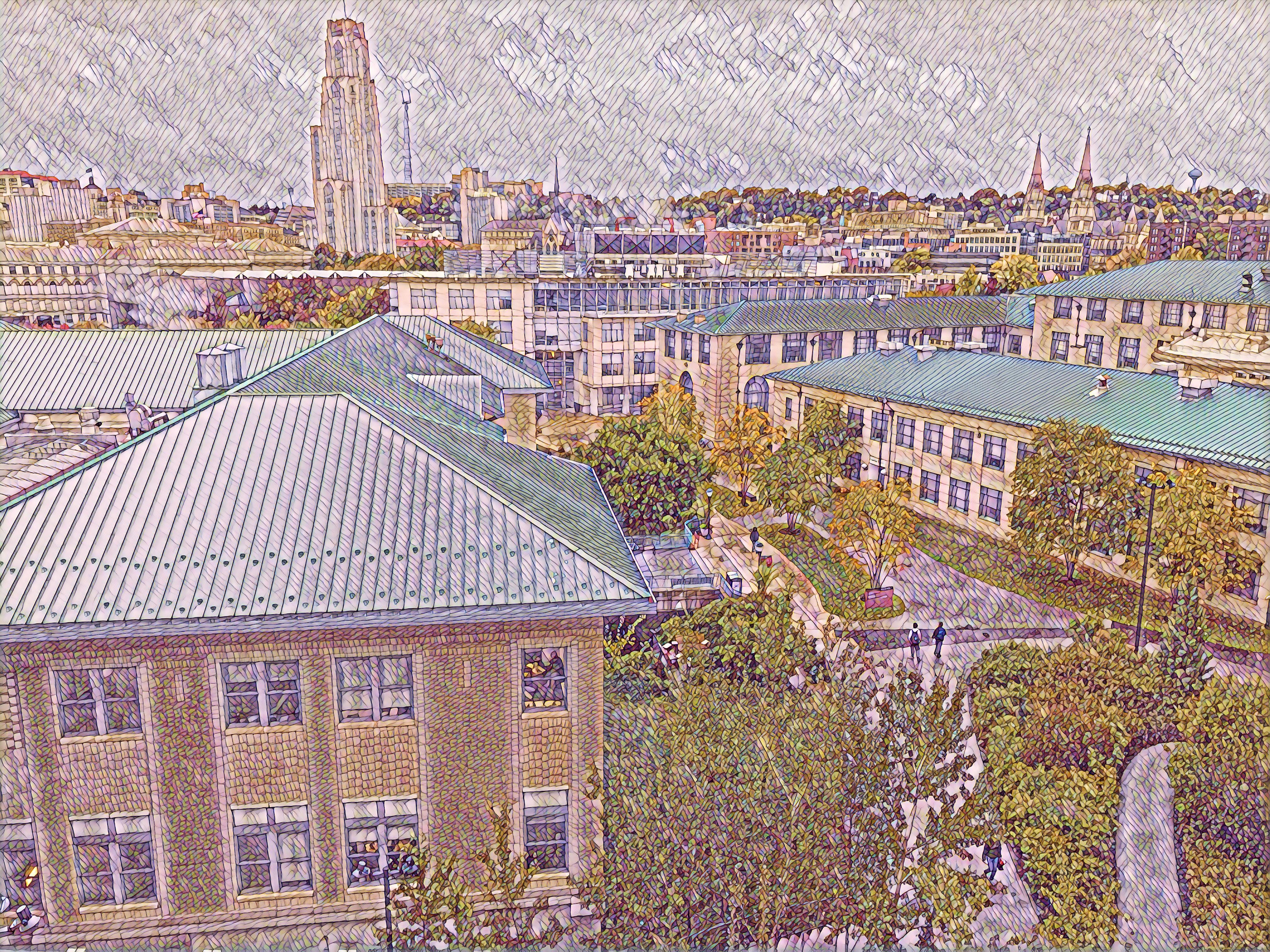

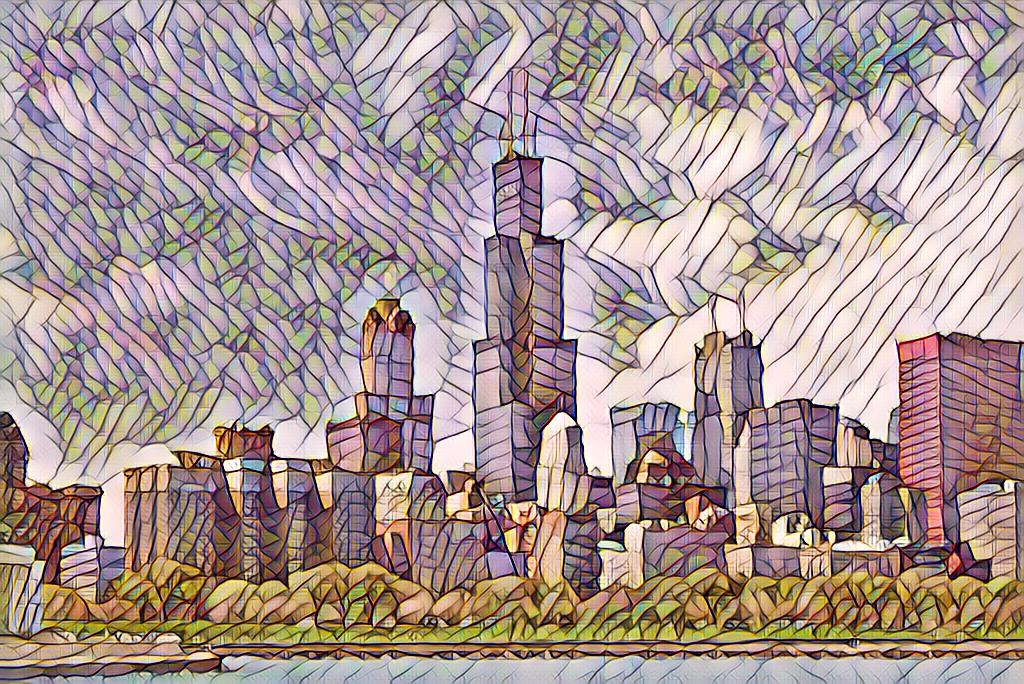

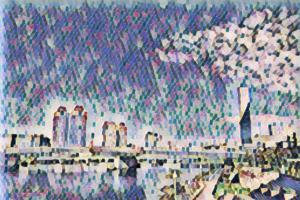

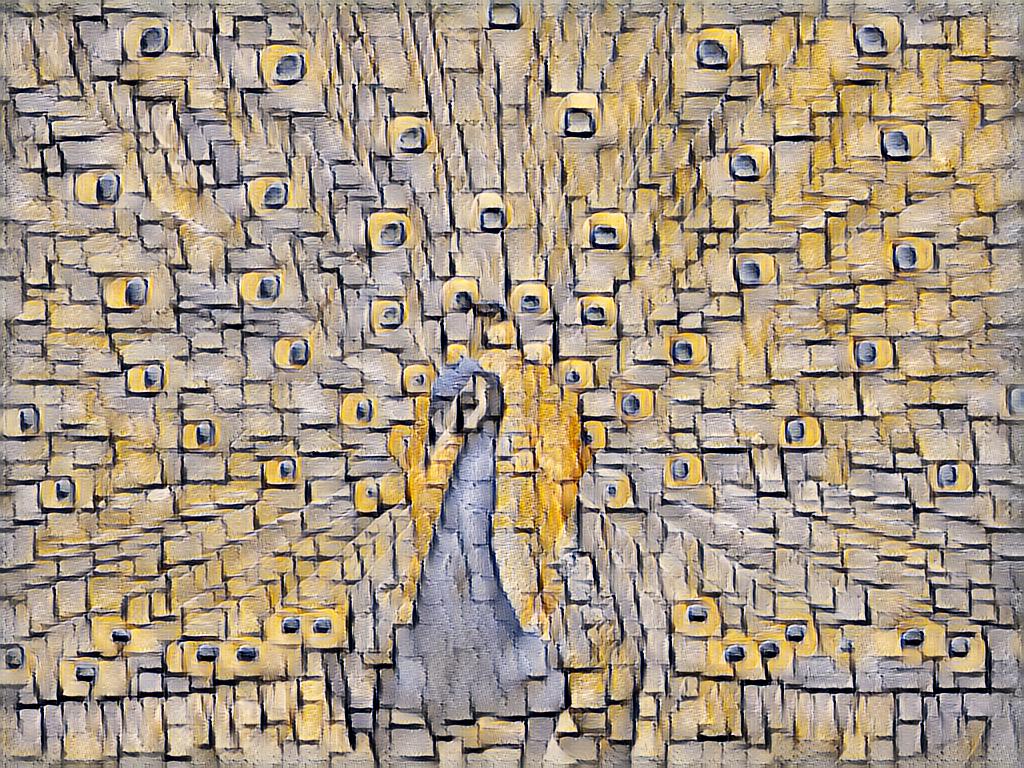

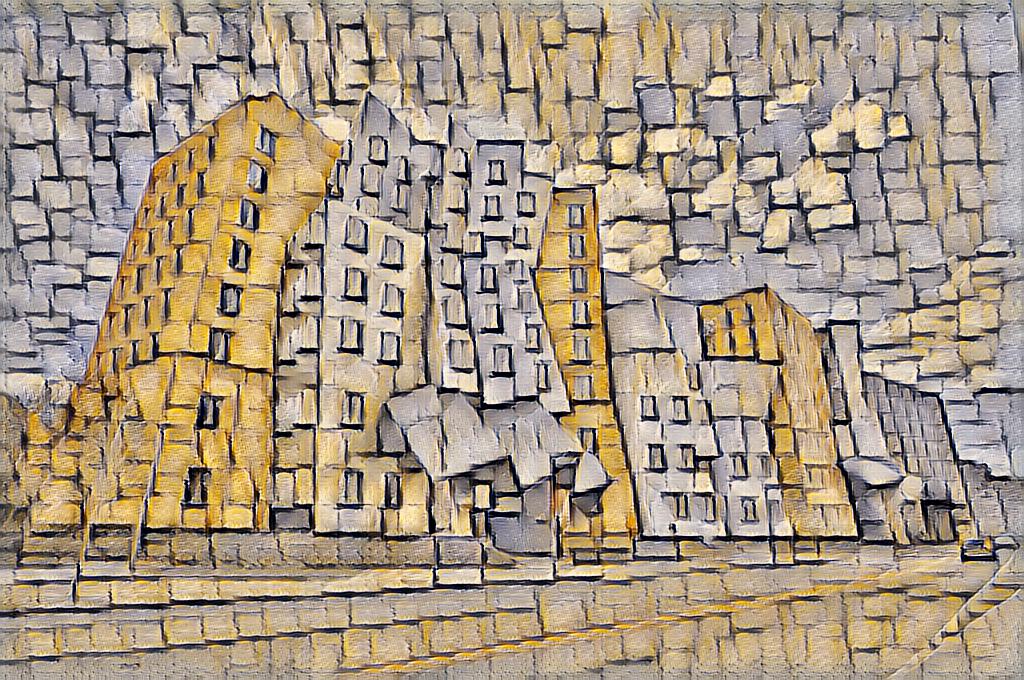

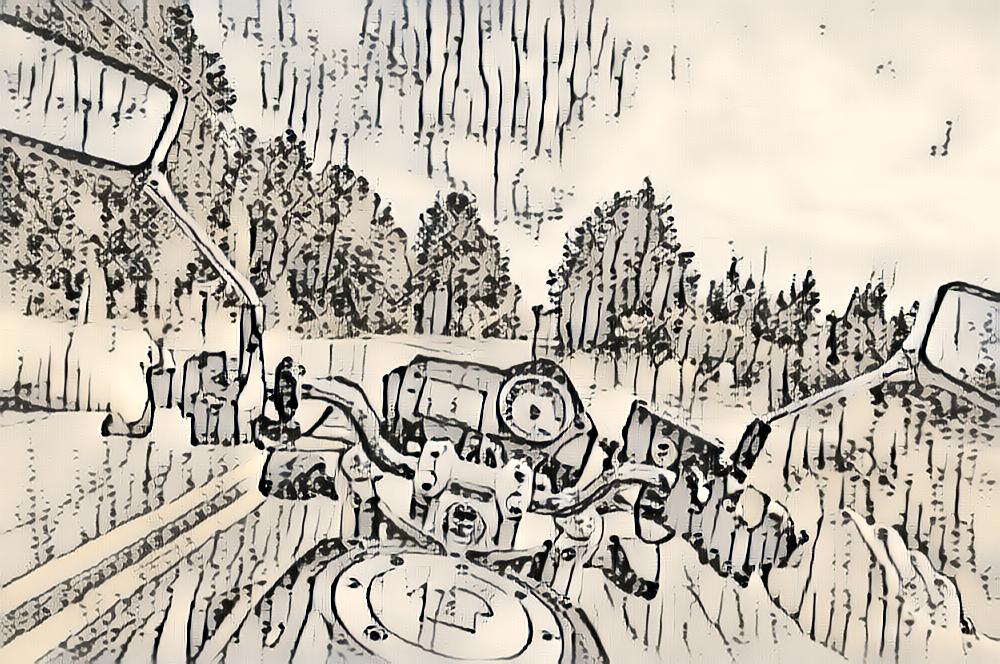

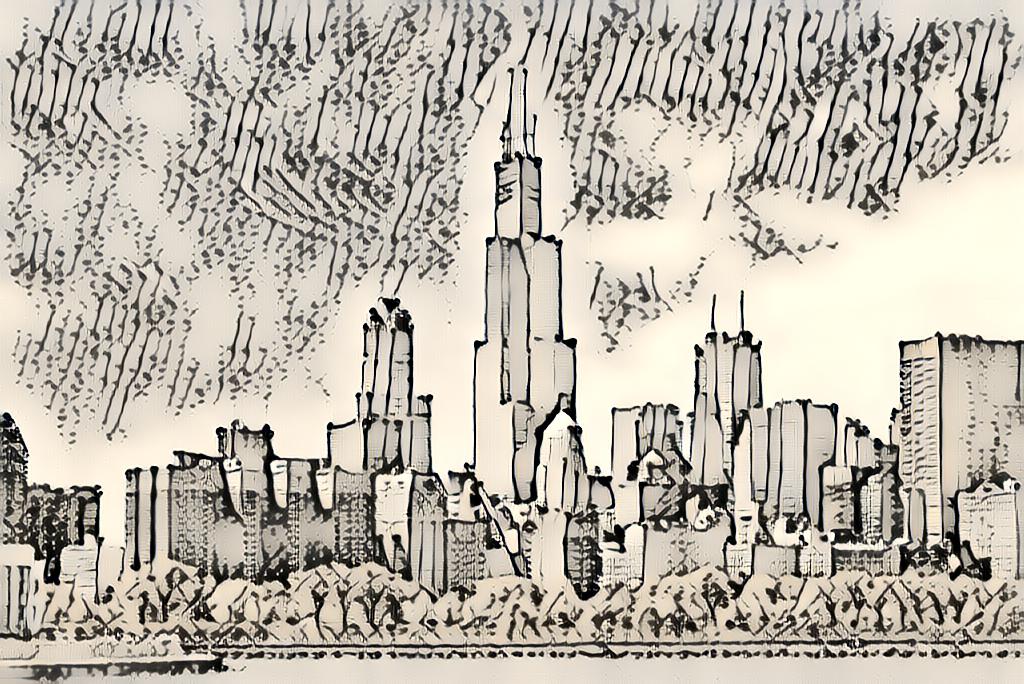

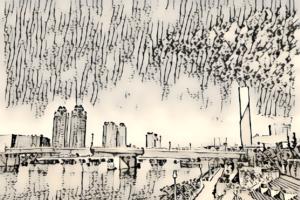

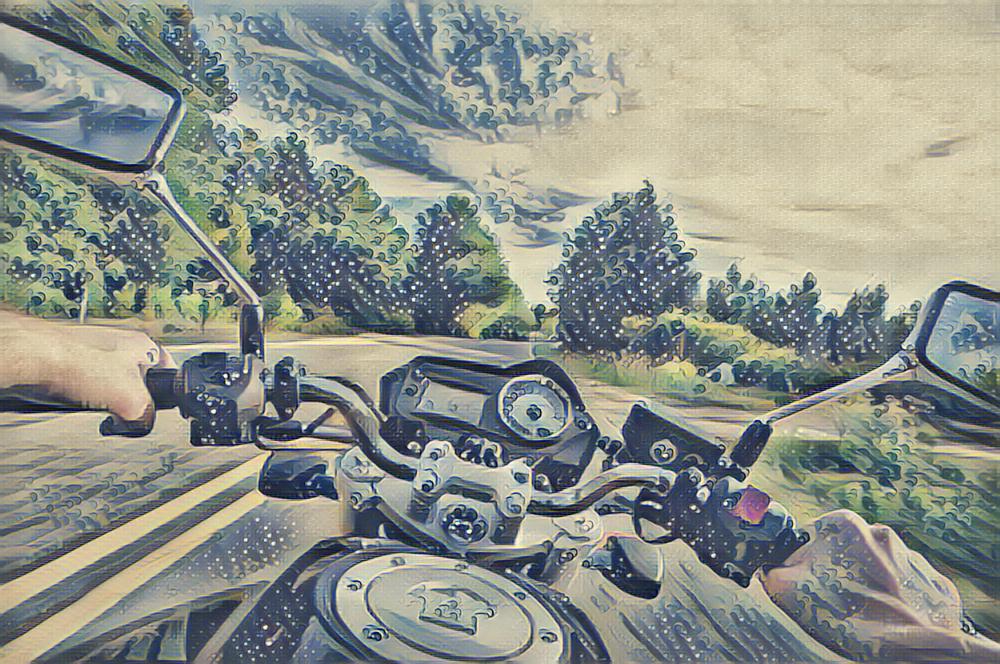

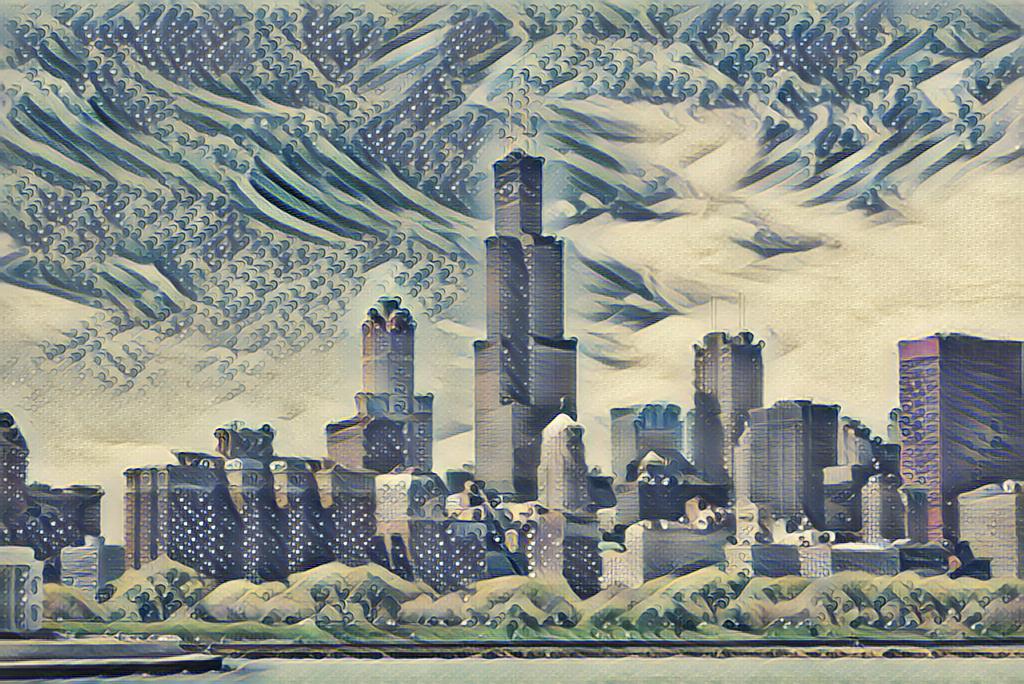

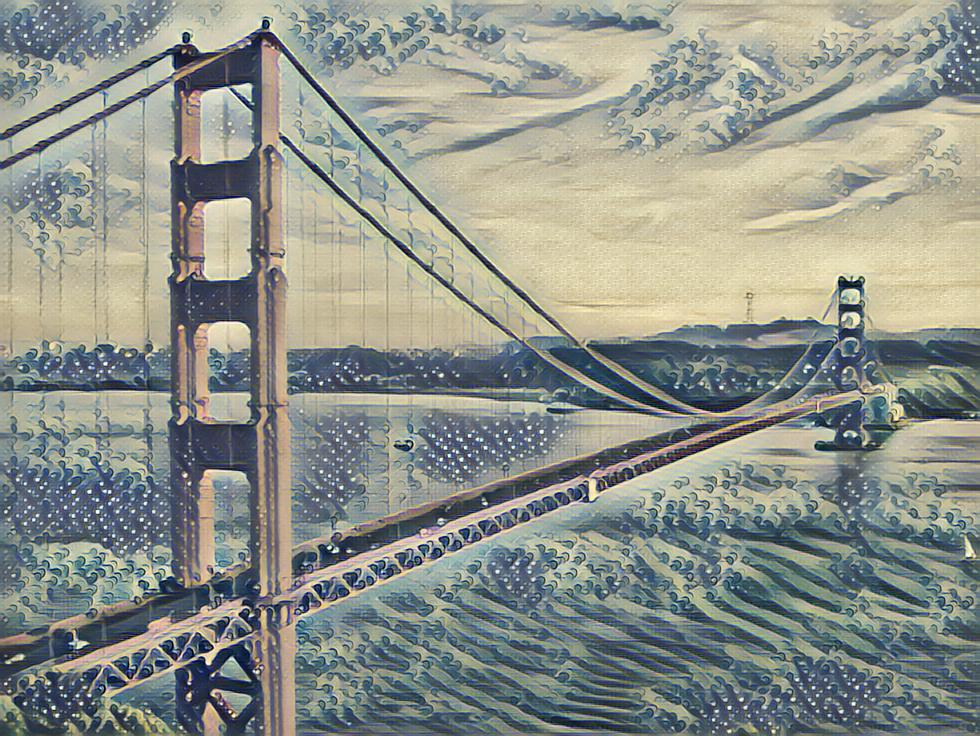

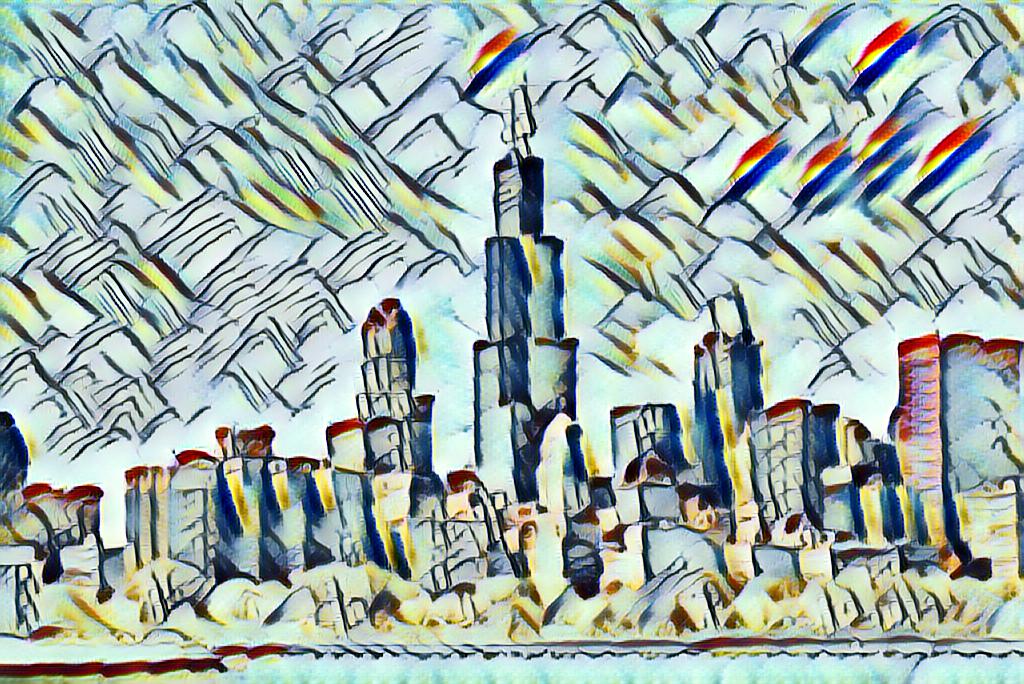

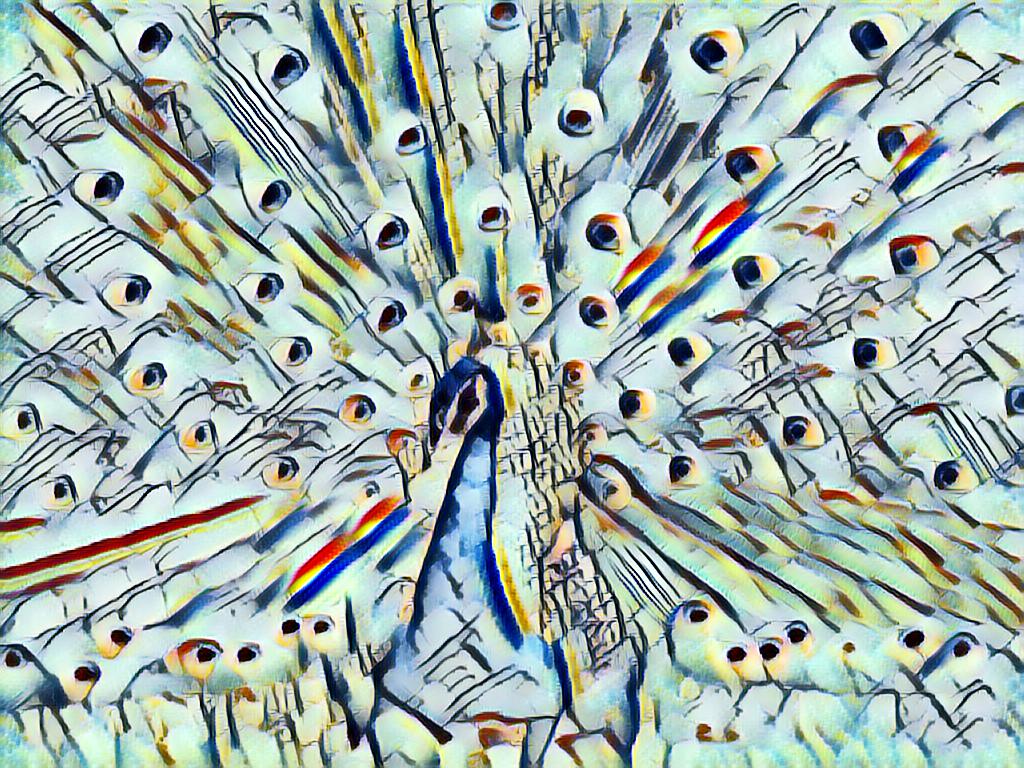

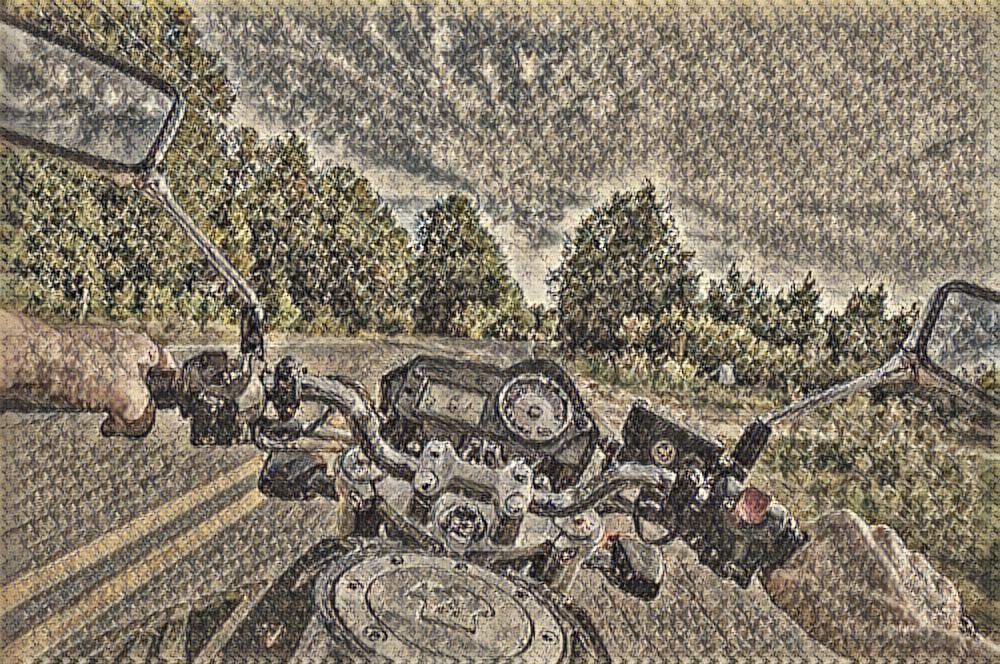

With a trained model, you can process images very quickly: all it takes is a single pass through a convolutional neural network. That speed requires a GPU, however, and the limited memory on GPUs means you can't process very large images. You can style much larger images on a CPU, but it takes a lot of time and memory. Styling this photo (4032x3024) took more than 5 minutes and about 120 gigabytes of memory, but it makes for a pretty cool wallpaper.

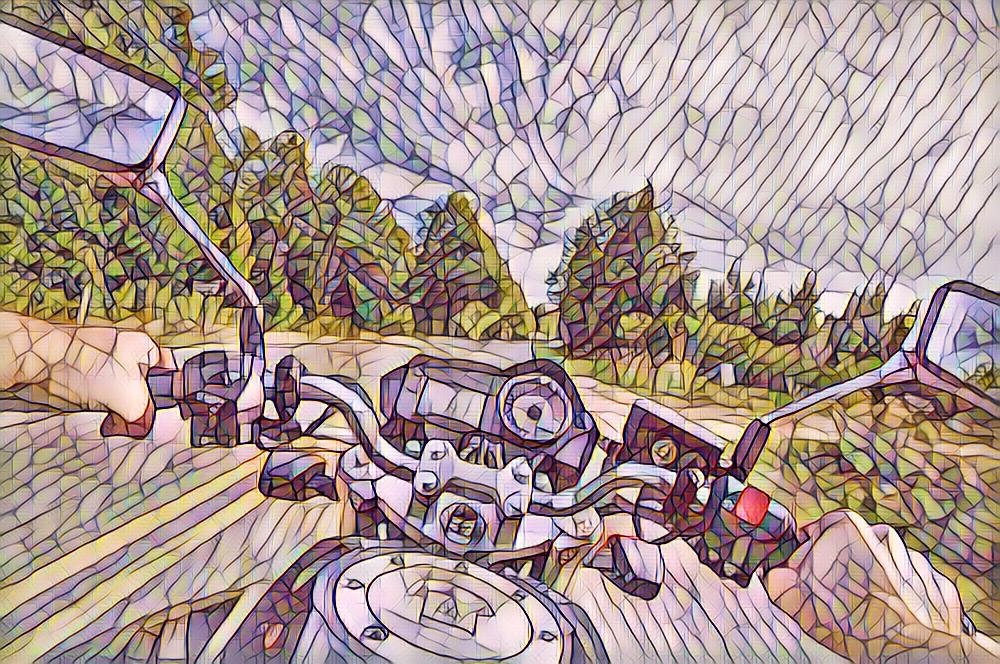

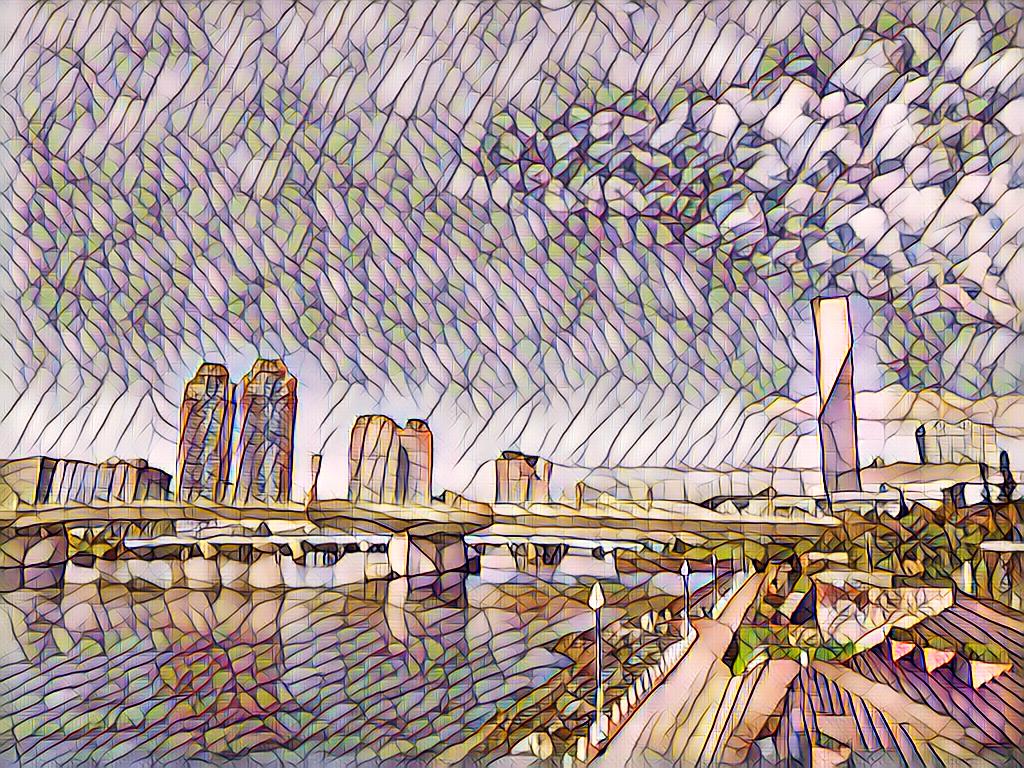

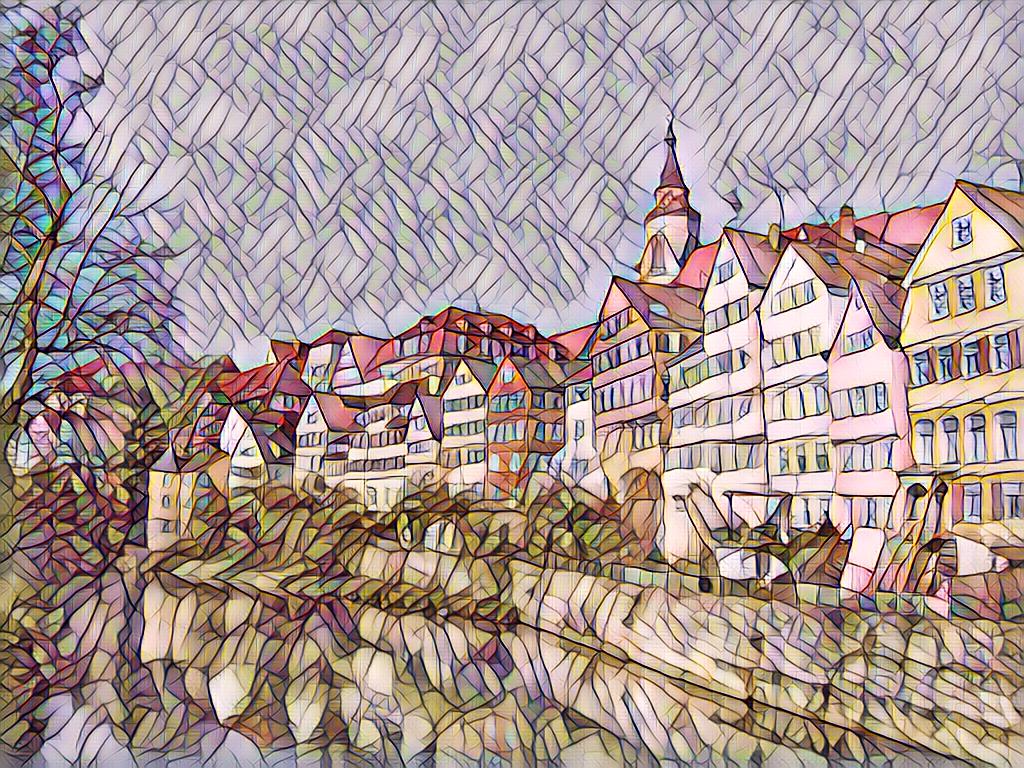

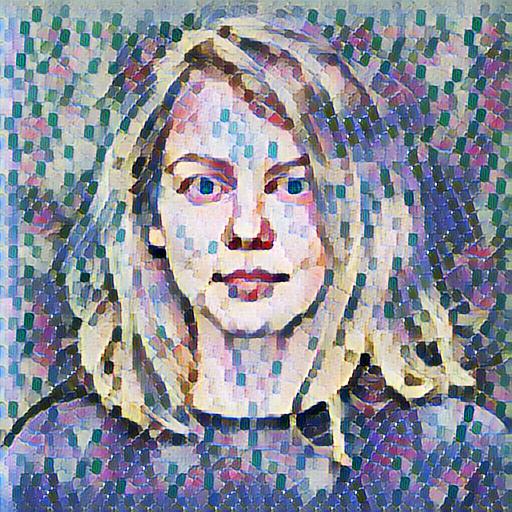

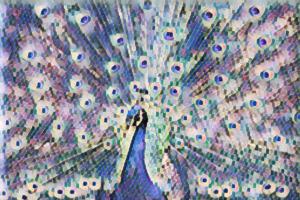

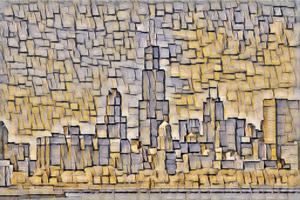

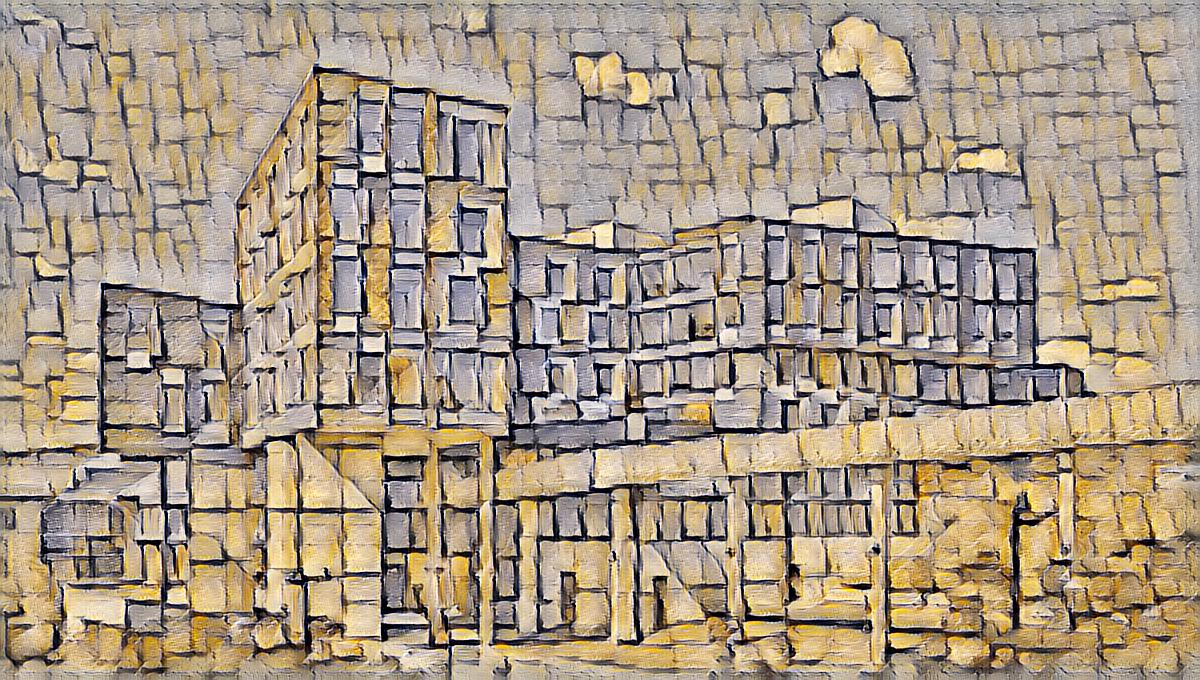

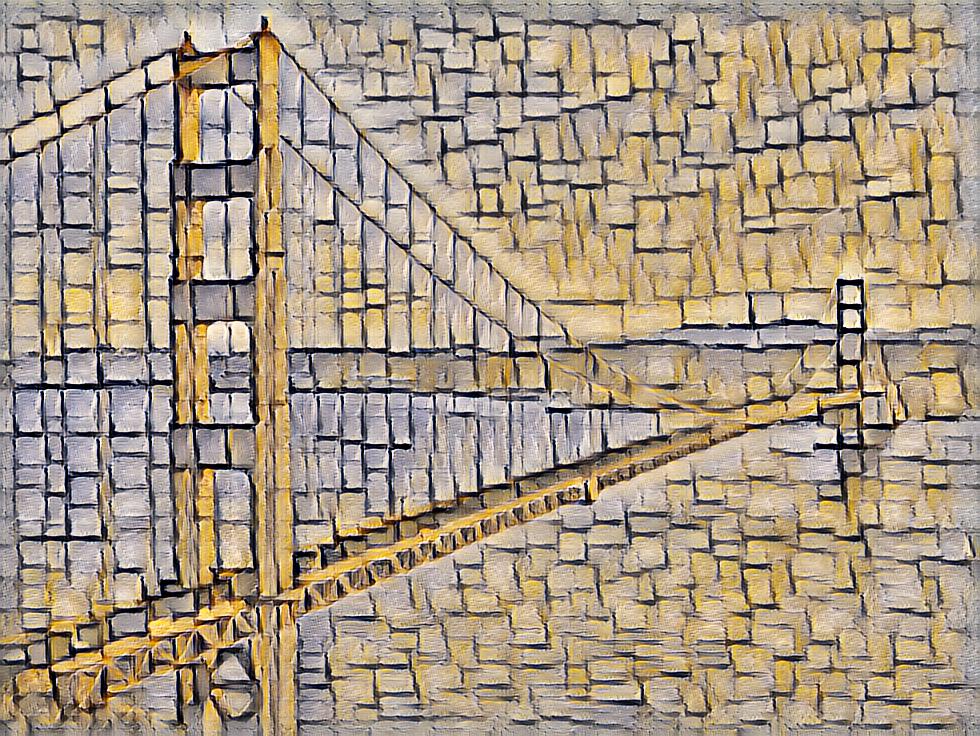

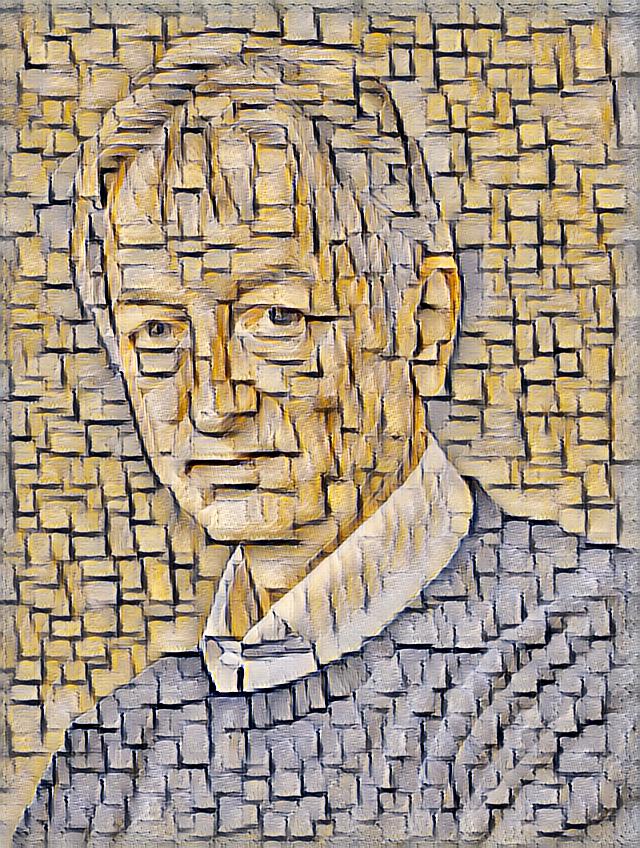

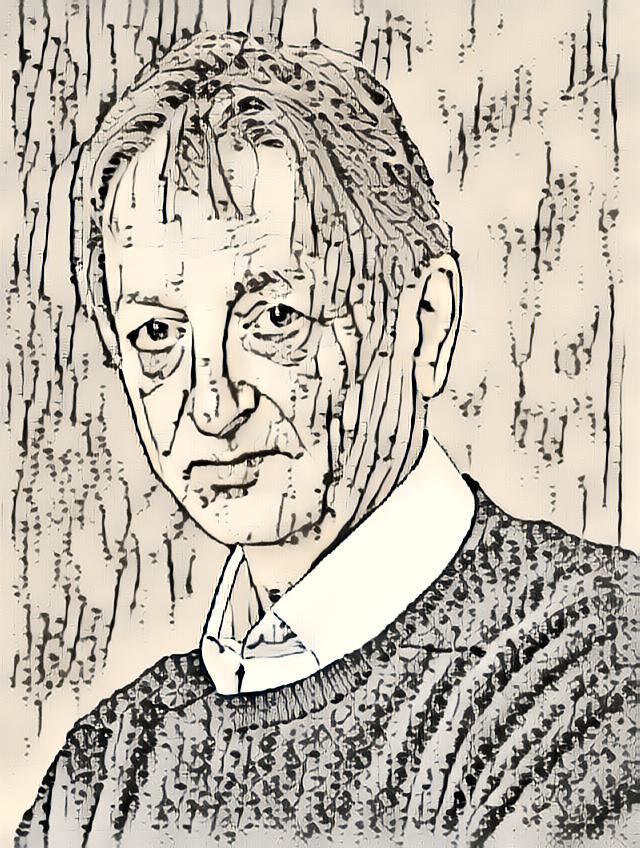

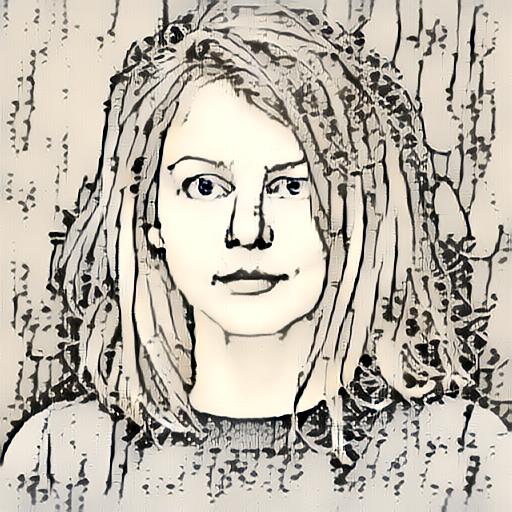

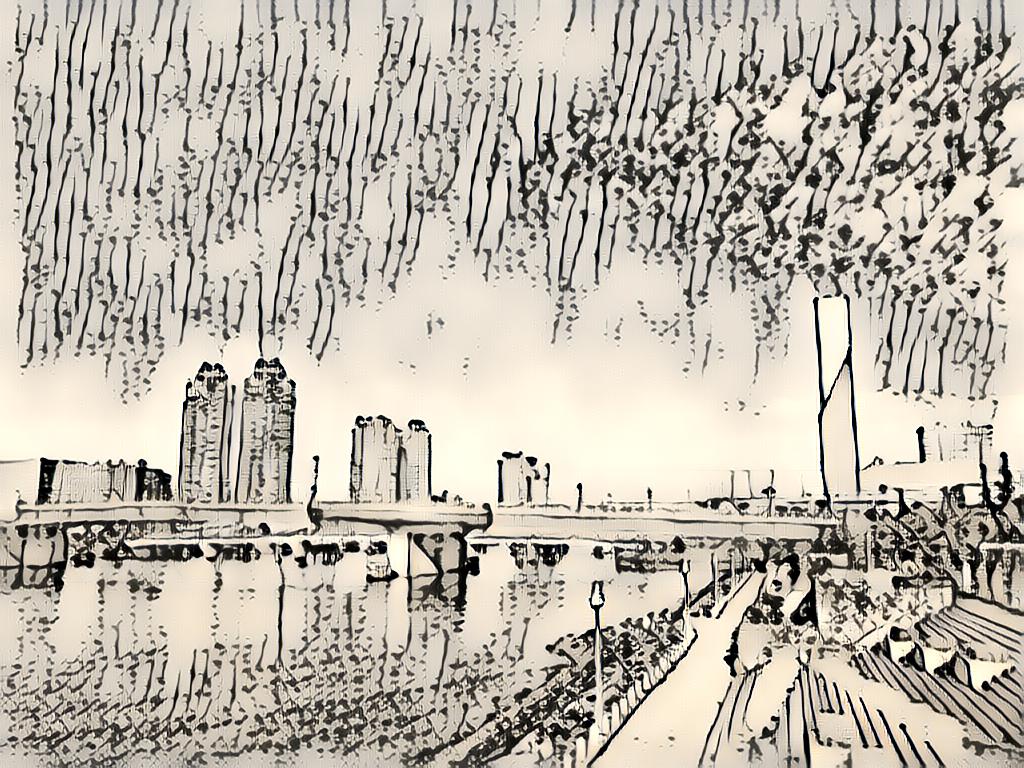

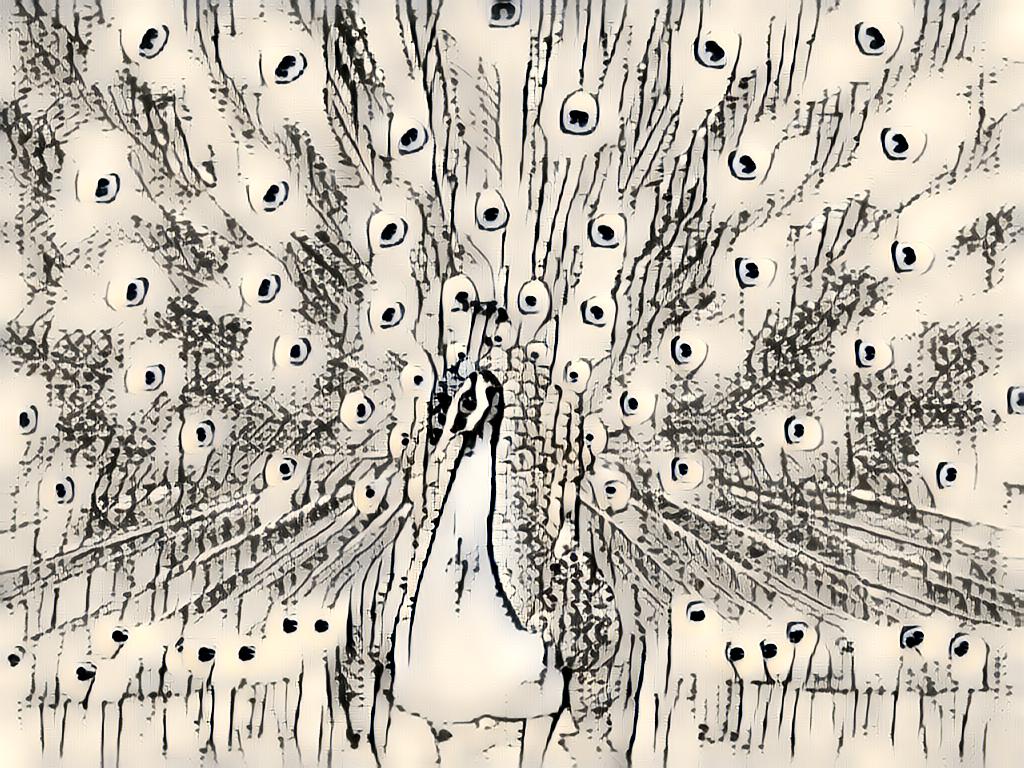

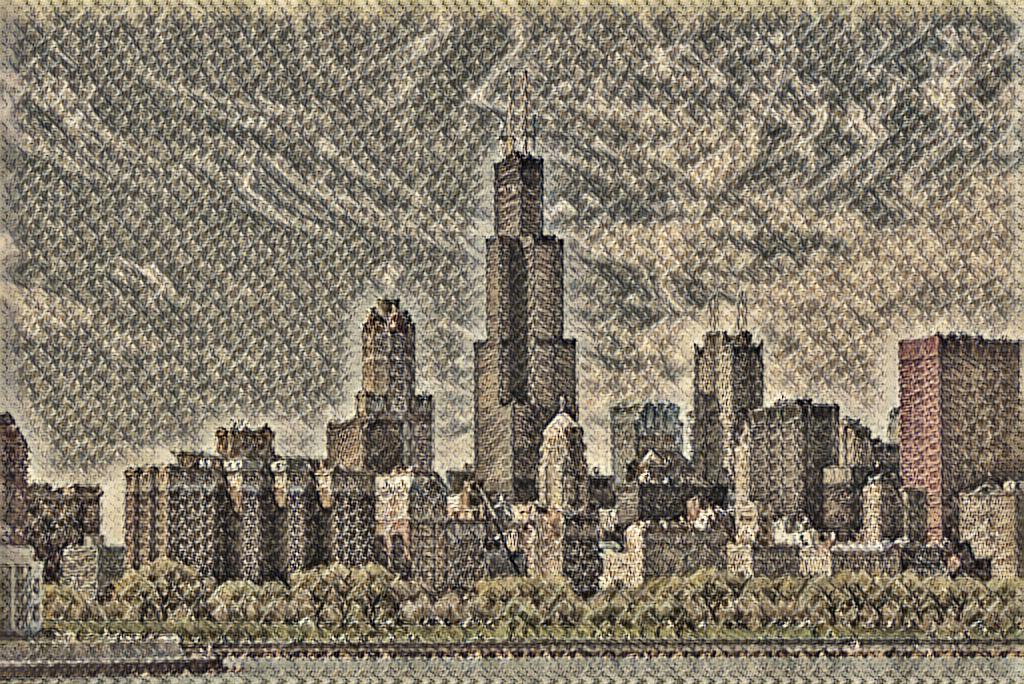

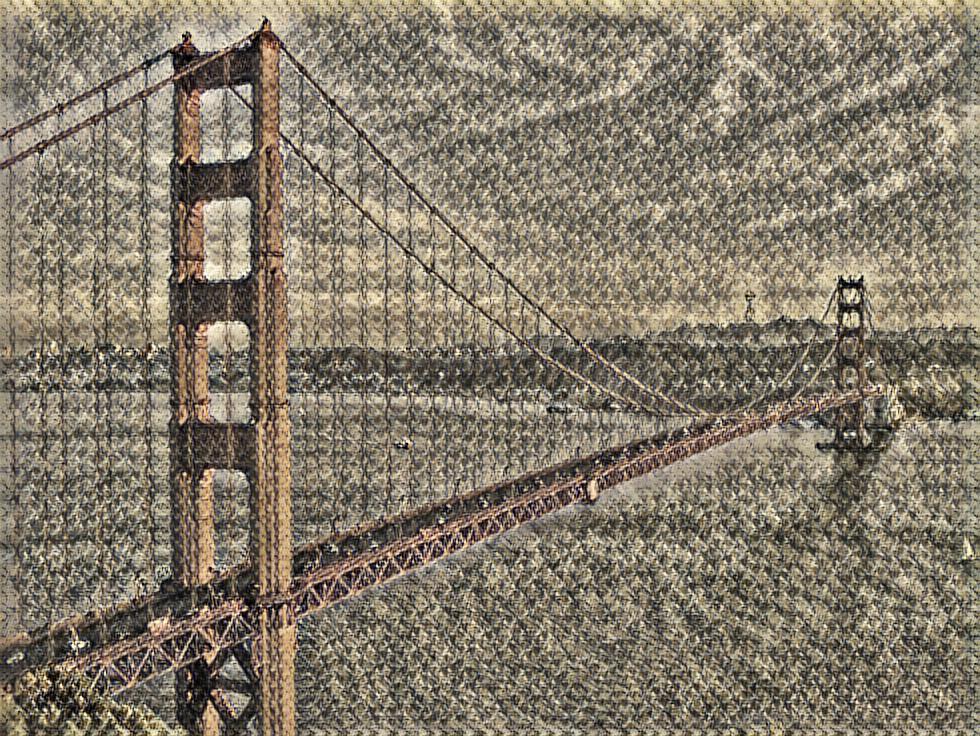

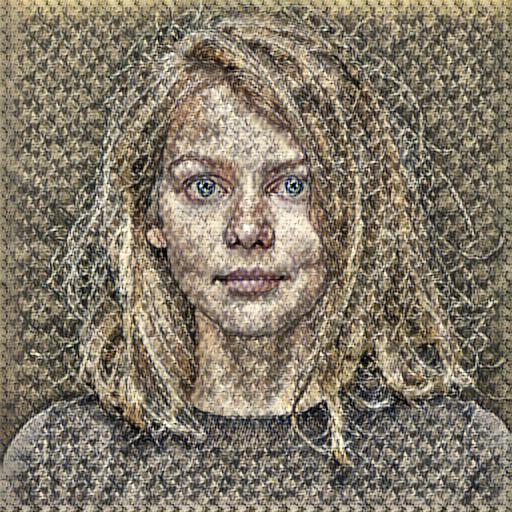

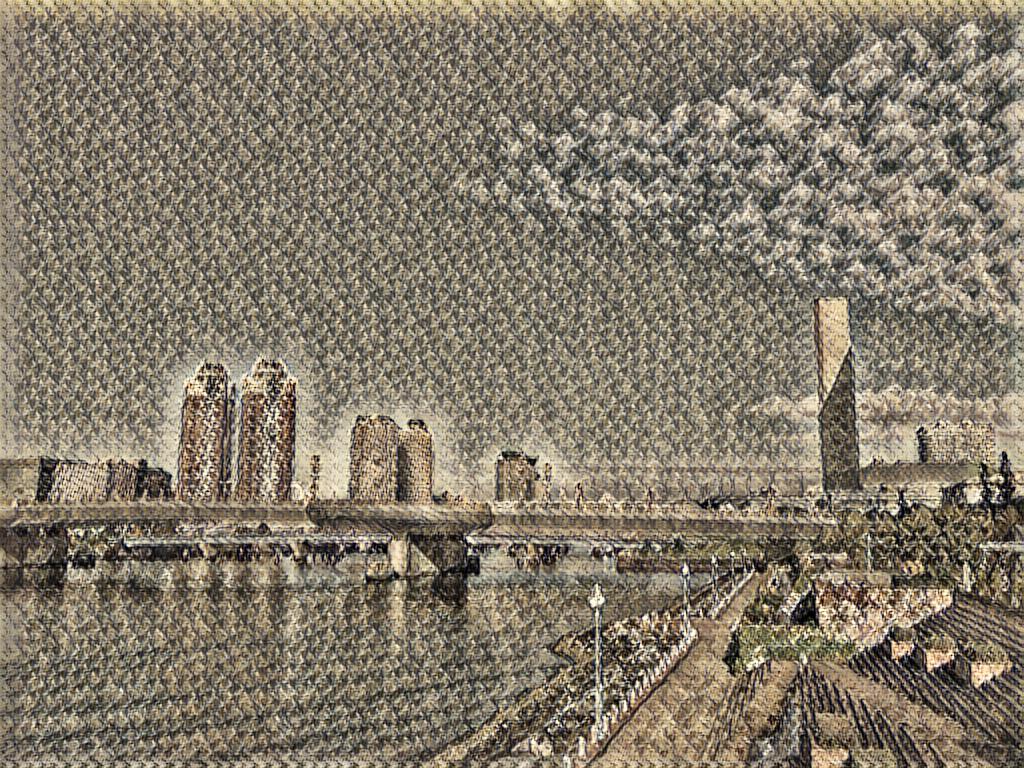

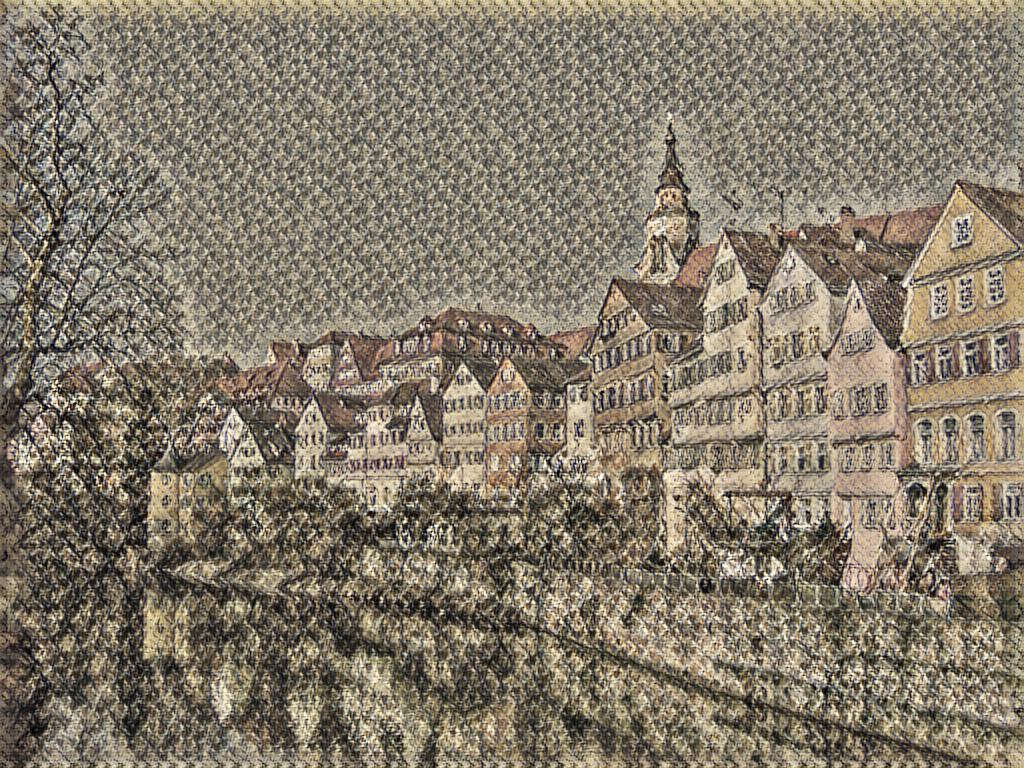
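The 120 GB figure for the 4032x3024 photo above can serve as a back-of-the-envelope guide for other sizes. The sketch below assumes that memory grows linearly with the number of pixels (activations dominate and model weights are ignored), which is only an approximation, and it applies only to the CPU run described here. The function names are hypothetical.

```python
# Measurement reported on this page for one CPU run (approximate).
MEASURED_WIDTH, MEASURED_HEIGHT = 4032, 3024
MEASURED_MEMORY_GB = 120.0


def estimate_memory_gb(width: int, height: int) -> float:
    """Rough memory estimate, assuming memory scales linearly with width*height."""
    measured_pixels = MEASURED_WIDTH * MEASURED_HEIGHT
    return MEASURED_MEMORY_GB * (width * height) / measured_pixels


def largest_square_side(budget_gb: float) -> int:
    """Side of the largest square image fitting the budget, under the same assumption."""
    gb_per_pixel = MEASURED_MEMORY_GB / (MEASURED_WIDTH * MEASURED_HEIGHT)
    return int((budget_gb / gb_per_pixel) ** 0.5)
```

Under this assumption, halving both dimensions (2016x1512) cuts the estimate to 30 GB, a 1920x1080 image would need roughly 20 GB, and a 120 GB budget allows a square image of about 3491 pixels per side.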

The remaining images on this page are much smaller and were processed in about 100 milliseconds. The original images are shown below.

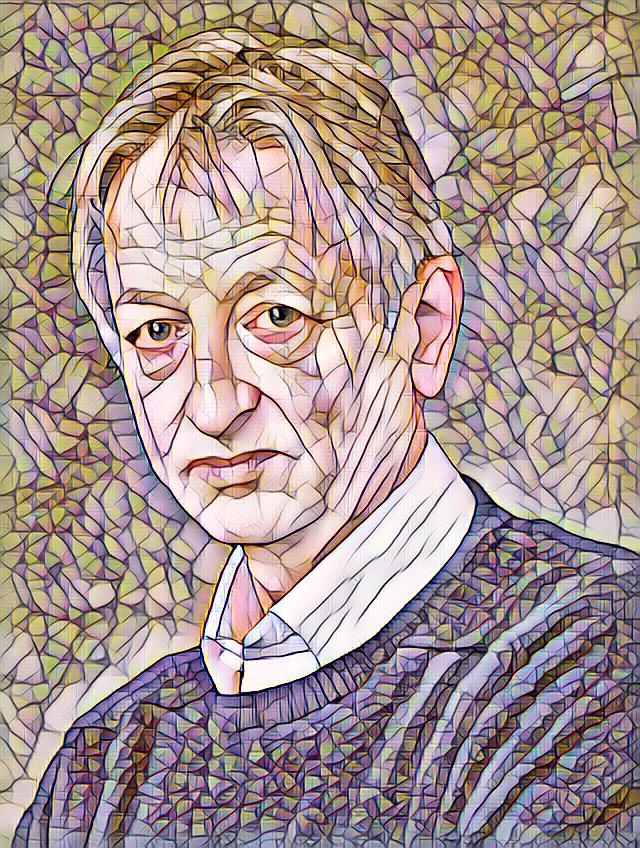

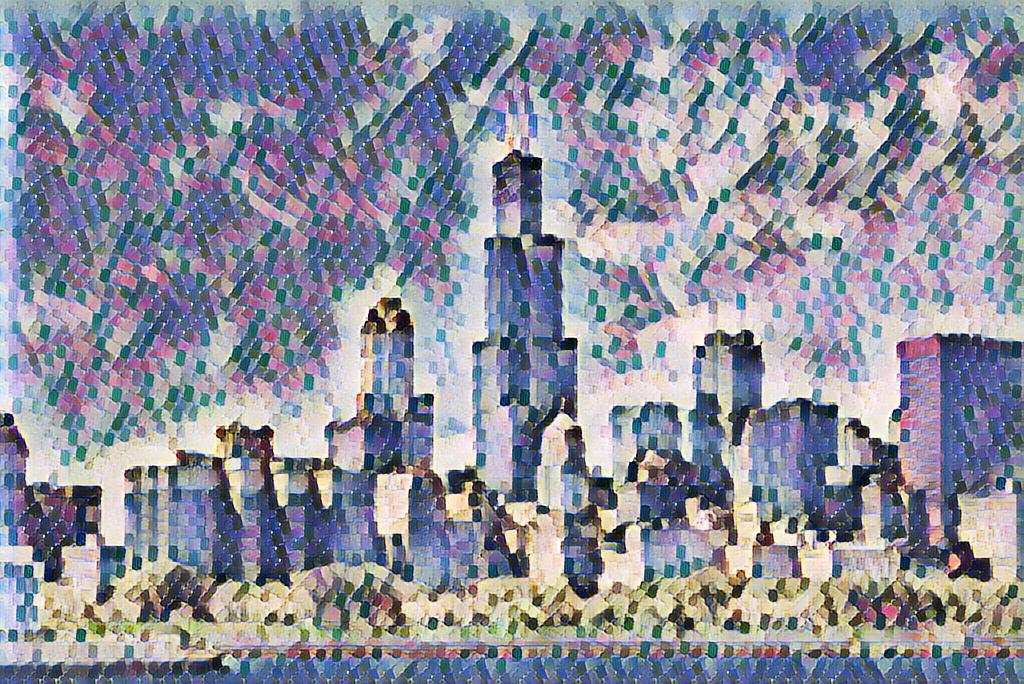

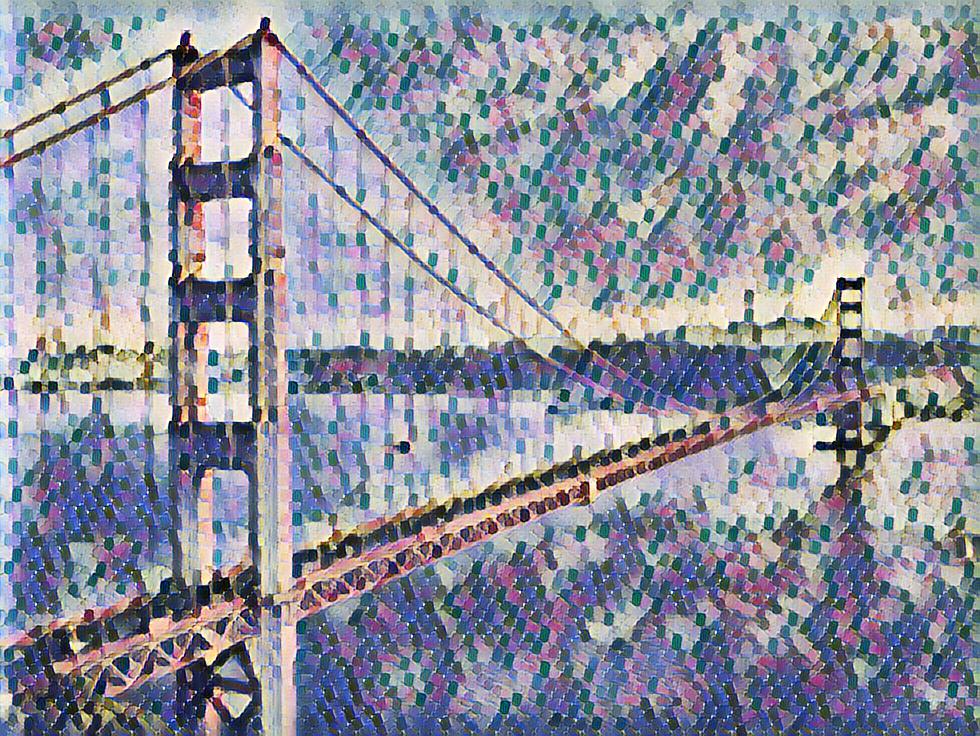

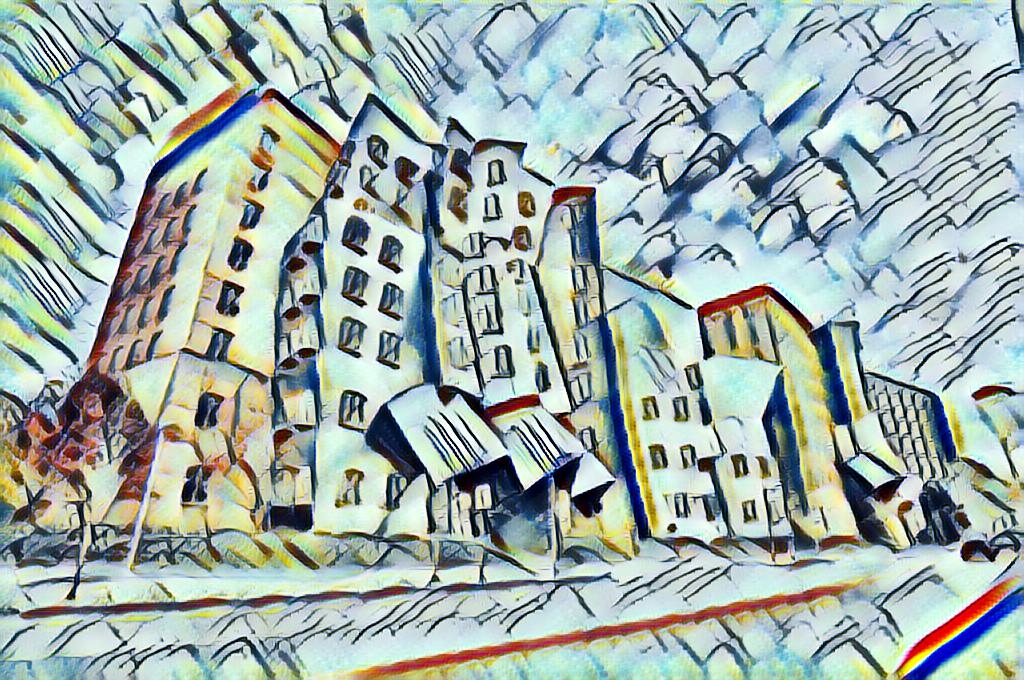

Next, these images are shown styled with various models. For each style, the trained model file is provided, and the first image in each section is the style image used to train that model. Unless specified otherwise, the default values were used for all training arguments.
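The per-model notes below can also be collected as data. This is a hypothetical sketch: the field names `content_weight`, `style_weight`, and `style_size` are not necessarily the repository's actual flag names, and because the default values are not stated on this page, they are represented as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class TrainingOverrides:
    """Training arguments changed from their defaults; None means the default was used.

    Field names are hypothetical and may not match the repository's flags.
    """
    content_weight: Optional[float] = None
    style_weight: Optional[float] = None
    style_size: Optional[int] = None


# Overrides as stated on this page; models not listed used defaults throughout.
OVERRIDES: Dict[str, TrainingOverrides] = {
    "Stained Glass": TrainingOverrides(style_weight=0.0001),
    "Composition X": TrainingOverrides(style_size=512),
    "Composition XIV": TrainingOverrides(content_weight=10.0),
    "Untitled, CR1091": TrainingOverrides(
        content_weight=5.0, style_weight=0.0001, style_size=400
    ),
    "Cossacks": TrainingOverrides(content_weight=10.0),
}


def overrides_for(model_name: str) -> TrainingOverrides:
    """Return the non-default arguments for a model (all None if it used defaults)."""
    return OVERRIDES.get(model_name, TrainingOverrides())
```

For example, `overrides_for("Udnie")` returns an instance with every field set to `None`, since that model was trained with the defaults.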

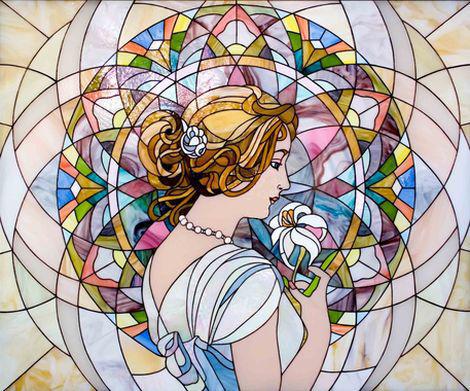

1 Stained Glass

[model]

For this model, the style weight was set to 0.0001.

2 Composition X by Wassily Kandinsky (1939)

[model]

For this model, the style size was set to 512.

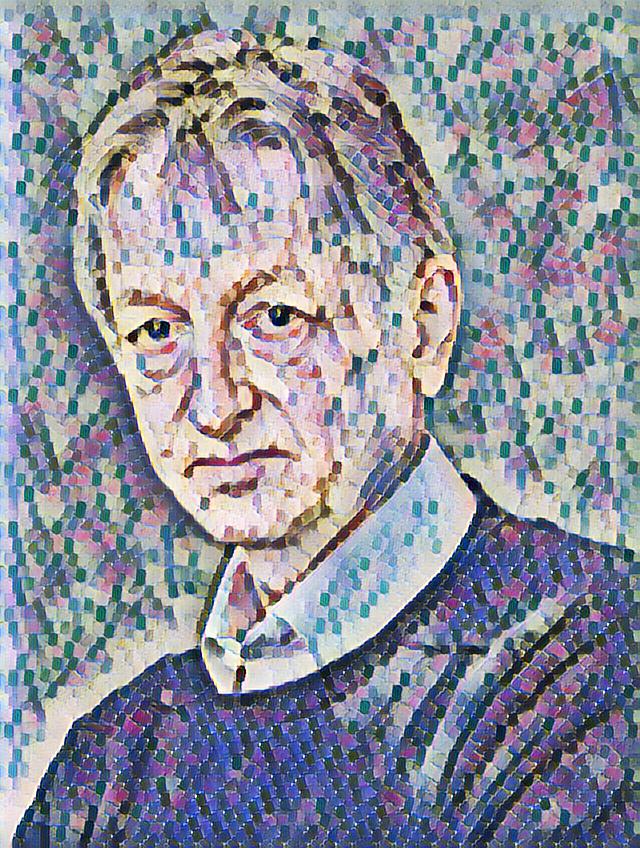

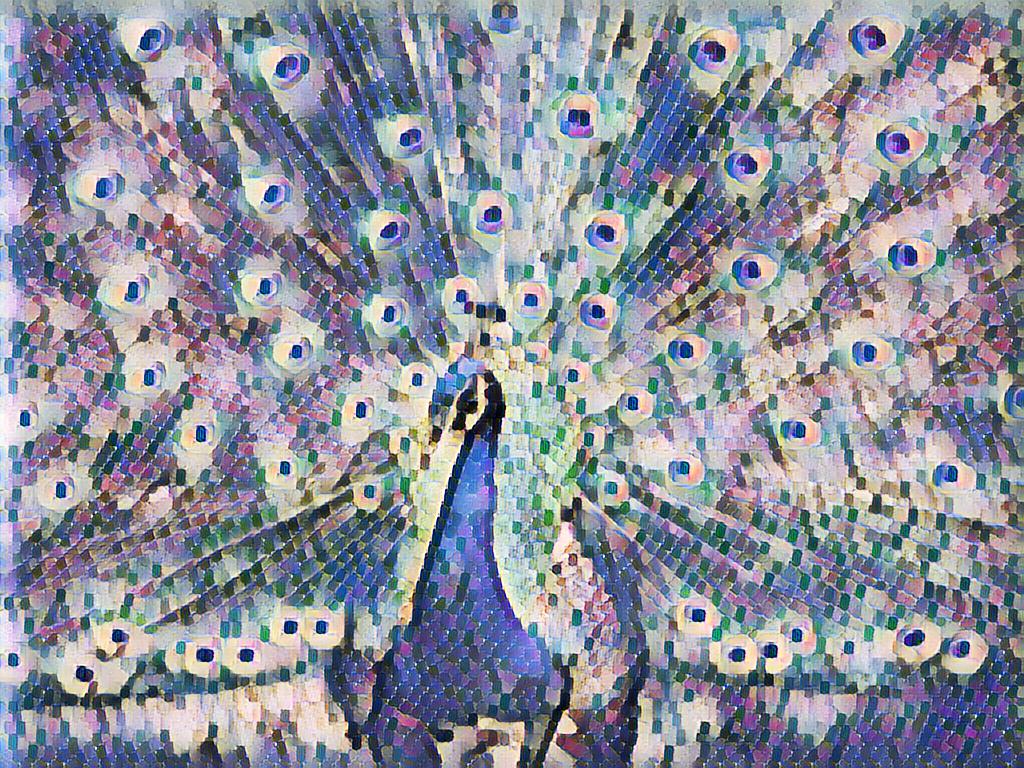

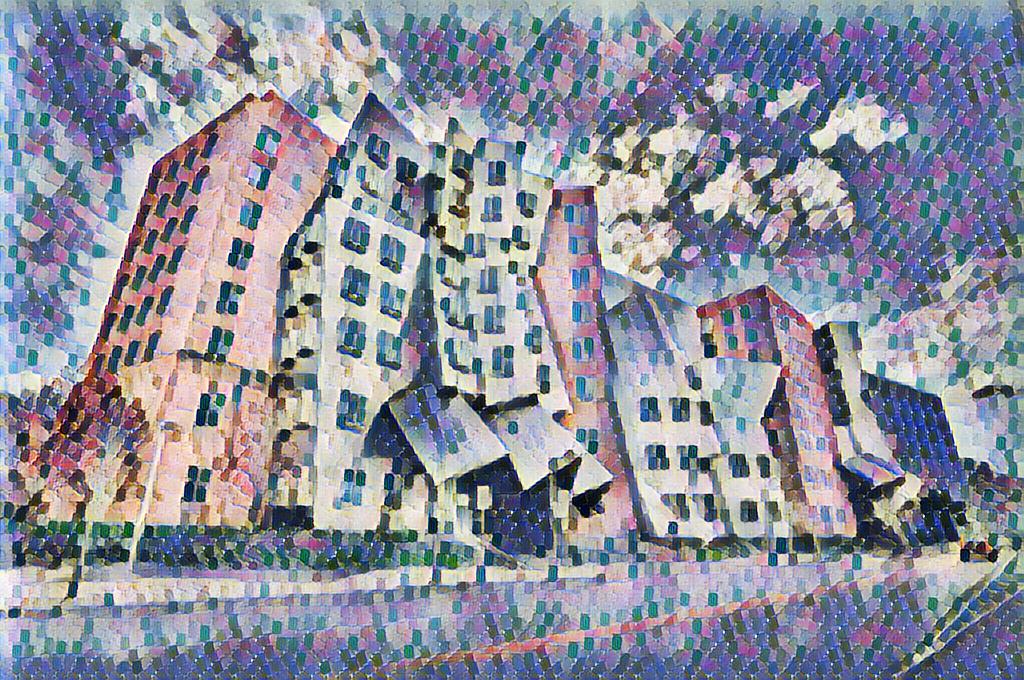

3 Portrait de Jean Metzinger by Robert Delaunay (1906)

[model]

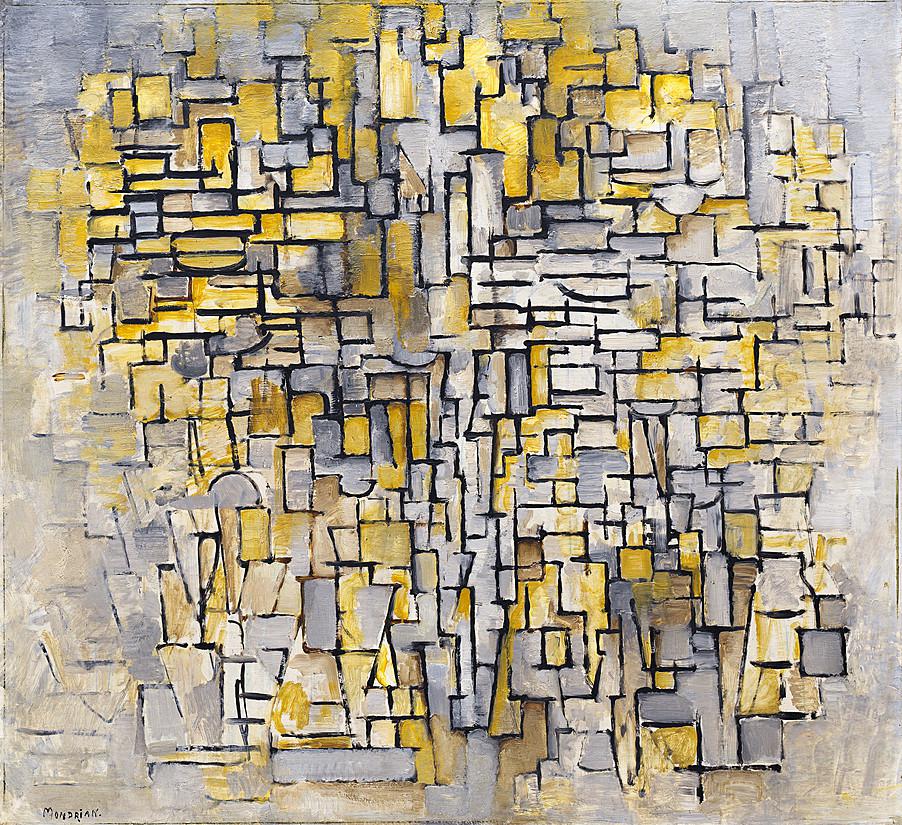

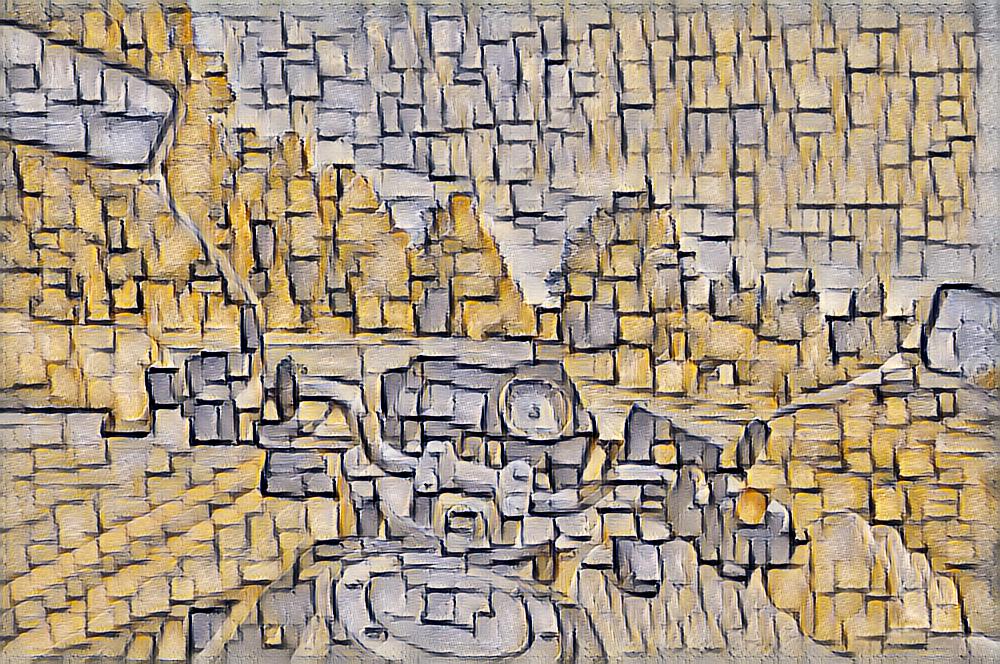

4 Composition XIV by Piet Mondrian (1913)

[model]

For this model, the content weight was set to 10.

5 Udnie by Francis Picabia (1913)

[model]

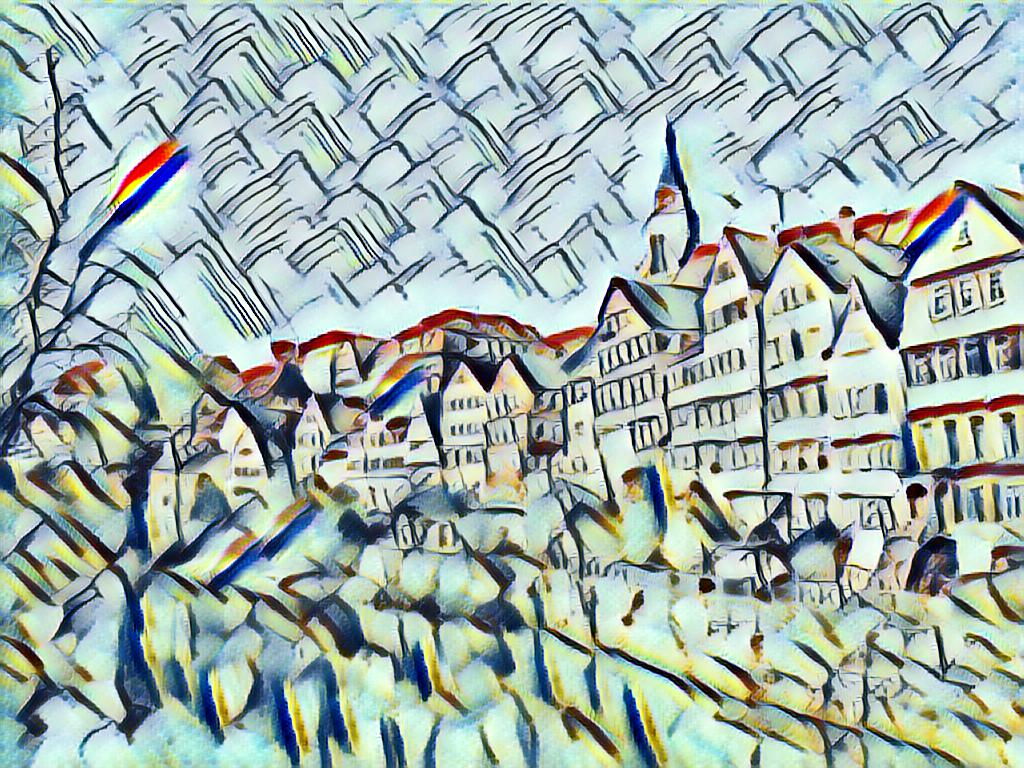

6 Untitled, CR1091 by Jackson Pollock (1951)

[model]

For this model, the content weight was set to 5, the style weight was set to 0.0001, and the style size was set to 400.

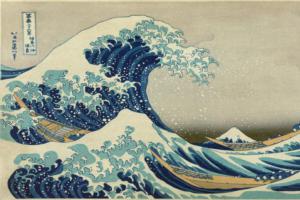

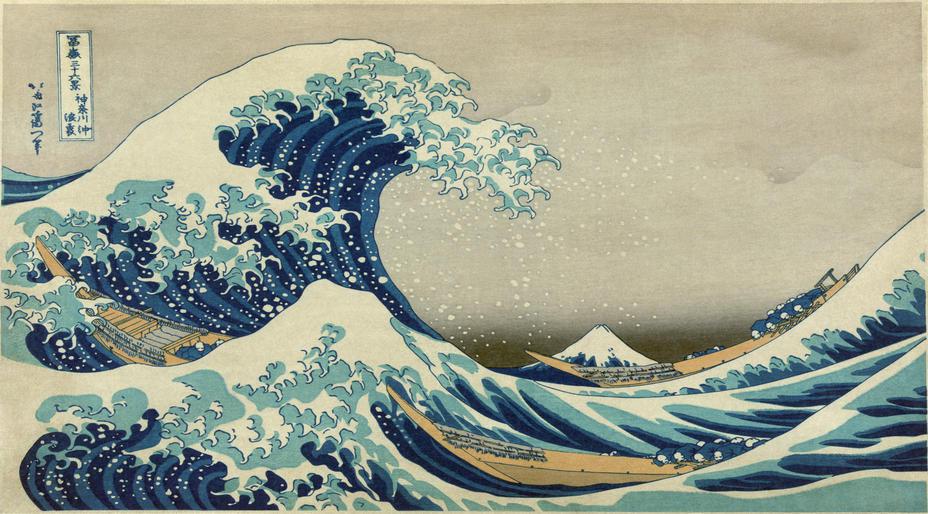

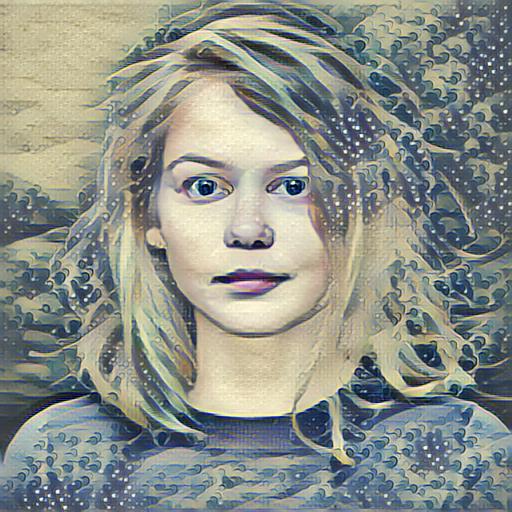

7 The Great Wave off Kanagawa by Hokusai (1830–1833)

[model]

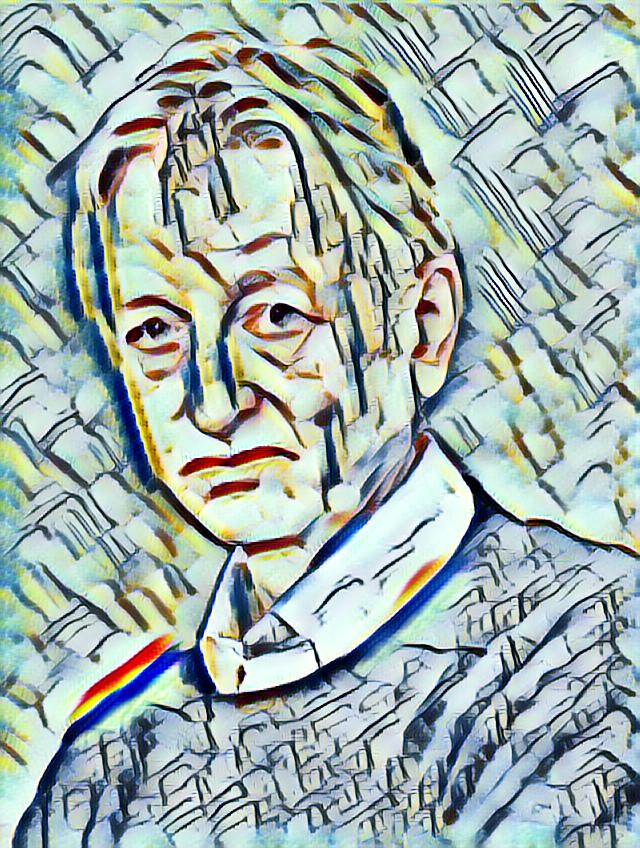

8 Cossacks by Wassily Kandinsky (1910)

[model]

For this model, the content weight was set to 10.

9 Flames

[model]

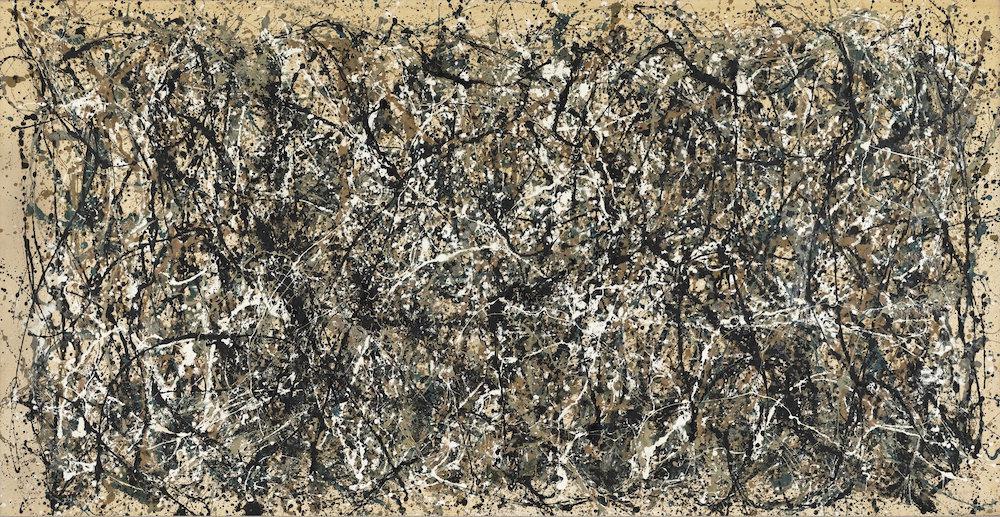

10 One: Number 31 by Jackson Pollock (1950)

[model]